Hey! I’m working with some fairly large documents (up to 1000 pages) where the headers must guide readers through the sections, chapters and paragraphs.

(Example: Section 3 · Chap. F · Par. 25 — Section 3 · Chapter G · Par. 2)

Thanks to the support of three very helpful members here, I was able to implement a solution with `query`, as discussed in the thread “How to get the number of the first and last paragraph on a page and show the result in the header?”

The main part to get the paragraph numbers for the header looks like this (full code at the end):

    // Helper Functions
    #let get-short(label, x) = {
      let shorts = query(selector(label).before(x.location()))
      return shorts.at(-1, default: none)
    }

    #let shorts-and-id(x) = {
      let h1 = get-short(<h1-short>, x)
      let h2 = get-short(<h2-short>, x)
      return (h1: h1.value, h2: h2.value, id: x.value)
    }

    // Chunk of the query code
    let pars = query(selector.or(<h4-start>, <h4-end>))
      .filter(x => x.location().page() == here().page())
      .map(shorts-and-id)
      .dedup()
    if pars.len() == 0 {
      // No paragraph starting or ending on this page
      // -> check if a paragraph starts before and ends after this page
      let prevs = query(selector(<h4-start>).before(here()))
      let nexts = query(selector(<h4-end>).after(here()))
      if prevs.len() == 0 or nexts.len() == 0 {
        return
      }

The problem with this solution is that compile times grow so steeply (cubic or even worse) that the documents quickly become uncompilable.

I didn’t notice that at first, because I only worked with draft parts. When I tried to compile one of the actual documents, it hadn’t finished after 5+ hours and I had to cancel the compilation.

The minimal example code (you’ll find a copy at the end) produces the following timings on my M1 MacBook (measured with `time` for `typst compile` in zsh):

1 Section: 0,64s user 0,07s system 95% cpu 0,743 total

2 Sections: 4,99s user 0,46s system 127% cpu 4,264 total

3 Sections: 27,57s user 3,39s system 141% cpu 21,922 total

4 Sections: 72,40s user 7,25s system 145% cpu 54,725 total

5 Sections: 151,57s user 24,86s system 135% cpu 2:09,92 total

6 Sections: 257,02s user 60,35s system 133% cpu 3:57,47 total

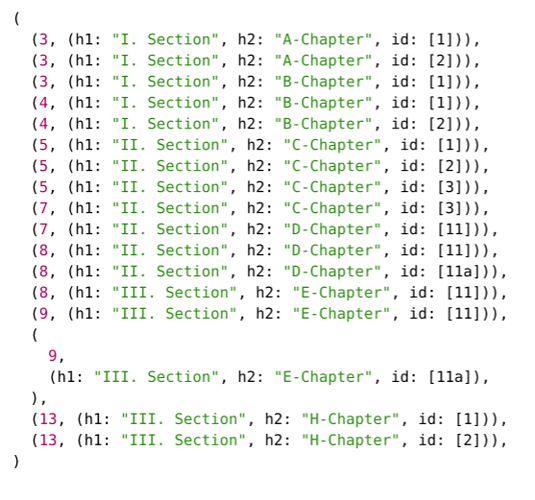

So while the amount of content went up 6x, the CPU user time went up roughly 400x. That points to a bottleneck that scales cubically or worse, which quickly leads to unworkable compile times for the actual documents…
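
As a rough sanity check on the cubic-scaling estimate, here is a back-of-the-envelope calculation. It assumes the CPU user time follows a simple power law $t(n) \approx c \cdot n^k$ in the number of sections $n$. That is only an assumption for illustration, not something six measurements can prove, and the symbols $t$, $c$ and $k$ are introduced here and do not appear anywhere in the code.

```latex
% Assumption: t(n) ~ c * n^k, where t(n) is the CPU user time for n sections.
% Dividing t(n) by t(1) removes the constant c and leaves the exponent k:
\[
  k \approx \frac{\ln\bigl(t(n)/t(1)\bigr)}{\ln n}
\]
% Plugging in the measured user times for 2 and 6 sections:
\[
  n = 2:\quad k \approx \frac{\ln(4.99/0.64)}{\ln 2}
        \approx \frac{2.054}{0.693} \approx 2.96
\]
\[
  n = 6:\quad k \approx \frac{\ln(257.02/0.64)}{\ln 6}
        \approx \frac{5.995}{1.792} \approx 3.35
\]
```

So under that assumption the effective exponent is close to 3 for the smallest case and somewhat above 3 for the largest one, which fits the impression from the plot.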

- Is there maybe an error or inefficiency in my code that leads to this (maybe parts like `.before(here())` are too open-ended)?
- How can I optimize the `query` code to display the Section/Chapter/Paragraphs with compile times that don’t feel like I’m mining Bitcoin on my poor laptop?

You can quickly test/reproduce this with the following MWE:

Full Code Example 📋

    // Helper Functions
    #let get-short(label, x) = {
      let shorts = query(selector(label).before(x.location()))
      return shorts.at(-1, default: none)
    }

    #let shorts-and-id(x) = {
      let h1 = get-short(<h1-short>, x)
      let h2 = get-short(<h2-short>, x)
      return (h1: h1.value, h2: h2.value, id: x.value)
    }

    // Output assembly
    #let header-content(a, b) = {
      return [#a.h1, #a.h2, Par. #a.id --- #b.h1, #b.h2, Par. #b.id]
    }

    // Actual Query
    #set page(
      width: 120mm,
      height: 100mm,
      header: context {
        let pars = query(selector.or(<h4-start>, <h4-end>))
          .filter(x => x.location().page() == here().page())
          .map(shorts-and-id)
          .dedup()
        if pars.len() == 0 {
          // No paragraph starting or ending on this page
          // -> check if a paragraph starts before and ends after this page
          let prevs = query(selector(<h4-start>).before(here()))
          let nexts = query(selector(<h4-end>).after(here()))
          if prevs.len() == 0 or nexts.len() == 0 {
            return
          }
          let prev = shorts-and-id(prevs.last())
          let next = shorts-and-id(nexts.first())
          if prev != next {
            return
          }
          return header-content(prev, next)
        }
        return header-content(pars.first(), pars.at(-1, default: none))
      },
    )

    // The Elements
    #let my-h1(short: none, ..args) = {
      heading(level: 1, ..args)
      [#metadata(short)<h1-short>]
    }

    #show heading.where(level: 1): it => {
      pagebreak(weak: true)
      it
    }

    #let my-h2(short: none, ..args) = {
      heading(level: 2, ..args)
      [#metadata(short)<h2-short>]
    }

    #let my-par(id: none, body) = text({
      [#metadata([#id])<h4-start>]
      if id != none {
        heading(level: 4, id)
      }
      body
      [#metadata([#id])<h4-end>]
      hide("")
    })

    #show heading.where(level: 4): it => it.body + " "

    // ⬇️ Test Content (setting num-sections below to 1,2,3…6 should roughly lead to the results in my post)
    #let num-sections = 1

    // Used chapter letters just for better overview in the example output
    // ABCDEFGHIJKLMNOPQRSTUVWXYZ
    #let chapter-letters = "ABCDEFGH"
    #let num-paragraphs-per-chapter = 25

    #let s = 1
    #while s <= num-sections {
      my-h1(short: "Section " + [#s])[This is Section #s]
      let c = 1
      for letter in chapter-letters {
        my-h2(short: "Chap. " + [#letter])[This is Chapter #letter]
        let p = 1
        while p <= num-paragraphs-per-chapter {
          my-par(id: [#p], lorem(50))
          p = p + 1
        }
      }
      s = s + 1
    }

(Note: It is set to output 1 section by default, so it doesn’t crash anyone’s tinymist. To reproduce the timings, change `num-sections` in the test content at the end of the code.)